LLMs.txt File Settings

What is the LLMs.txt File?

Control how AI search engines and language model crawlers can access and use your website’s content.

AI is changing how people find information online. Instead of just typing keywords into Google, more and more users are asking AI tools like ChatGPT or Gemini for answers.

Here’s the problem: if AI can’t easily understand and read your website, it won’t include your content in its answers. That means missed visibility and traffic.

That’s where the LLMs.txt file comes in.

Think of the LLMs.txt file as a guidebook for artificial intelligence. Just like a robots.txt file gives instructions to traditional search engines like Google, LLMs.txt is designed for AI crawlers and large language models (LLMs) — the systems behind ChatGPT, Claude, Gemini, and AI-powered search.

In Short

- llms.txt is similar in concept to robots.txt, but specifically tailored for LLMs (large language models).

- The goal is to guide AI crawlers (which gather data from sites to train LLMs) on what content they can or cannot use from your site.

- By using the llms.txt feature from Squirrly SEO, you can create and configure your own llms.txt file directly from the plugin.

- Once enabled, the file will be available at https://yourdomain.com/llms.txt and will act as a set of rules for AI crawlers that want to use your content to train LLMs.

[Benefits] Why is the LLMs.txt File Important?

When AI tools can quickly understand your site, they’re more likely to include your content in AI-powered answers and search results.

Here are the main benefits of using the LLMs.txt file:

1. Better visibility in AI-driven search engines

Without an LLMs.txt file, AI crawlers may struggle to navigate your site or understand which pages matter most. That confusion makes it less likely your content will show up in AI-powered search results or summaries.

By providing a clean, structured index, you help AI systems quickly find and interpret your most valuable content — which increases the odds of your site being included in AI-generated answers.

2. Control over AI usage

Not every part of your website should be accessible to AI. Think about checkout pages, admin dashboards, member-only content, or premium resources. With LLMs.txt, you decide what’s open and what’s off-limits.

That gives you far more control over how your content is used by AI companies.

3. Protection of sensitive or proprietary data

AI crawlers are hungry for data, but you don’t want them training on sensitive material.

LLMs.txt lets you mark specific sections as off-limits, such as customer data, paid content, or internal resources. Crawlers that honor the file will skip those sections. Because the rules are instructions rather than access controls, anything truly private should also stay behind a login.

4. A foundation for GEO (Generative Engine Optimization)

Just like SEO helps your site rank in Google, Generative Engine Optimization (GEO) focuses on making your content easier for AI tools to understand and feature. LLMs.txt is an integral component of GEO, ensuring your content is presented to AI in a clear, structured format.

As AI-driven search becomes more common, implementing llms.txt now can put you ahead of the competition.

Now that you know the key benefits of using an LLMs.txt file, the next question is:

How do you actually set this up for your website? We’ll cover that next.

Easily Create an LLMs.txt file for Your Website using Squirrly SEO

If you’re using Squirrly SEO, the good news is that you don’t need to create the file manually.

The plugin includes a dedicated LLMs.txt feature that lets you enable and configure rules for AI crawlers and language models. This way, you control how your site’s content can be accessed and used by AI search engines — all from your WordPress dashboard.

Let’s walk through where to find this feature in Squirrly SEO and how to set it up.

How to Activate the LLMs.txt File Feature

- Go to Squirrly SEO > All Features > Type “LLMs” in the search field > Switch the toggle to the right to activate the feature.

How to Access the LLMs.txt File Feature

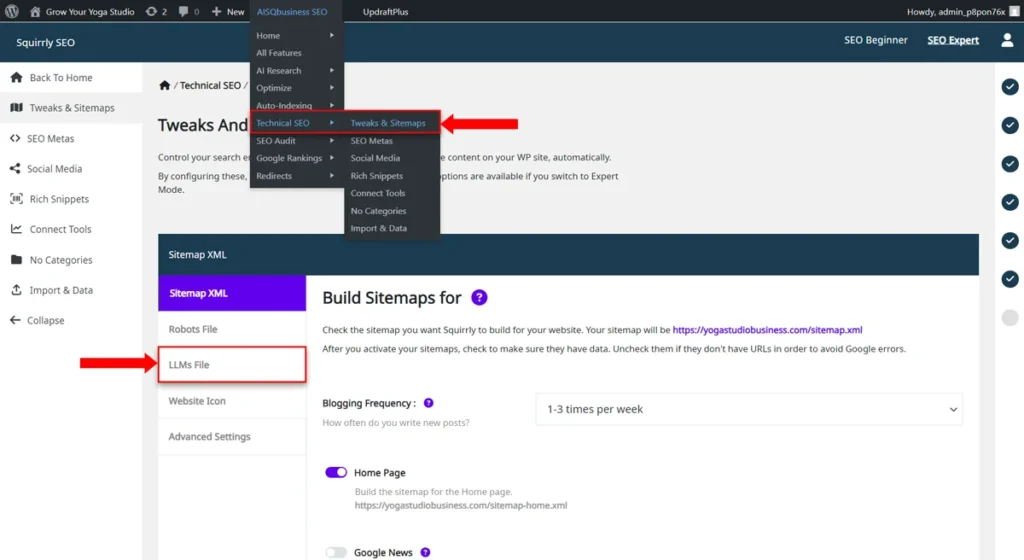

- After activating the feature, navigate to Squirrly SEO > Technical SEO > Tweaks & Sitemap > LLMs File to access it.

Default LLMs.txt Code

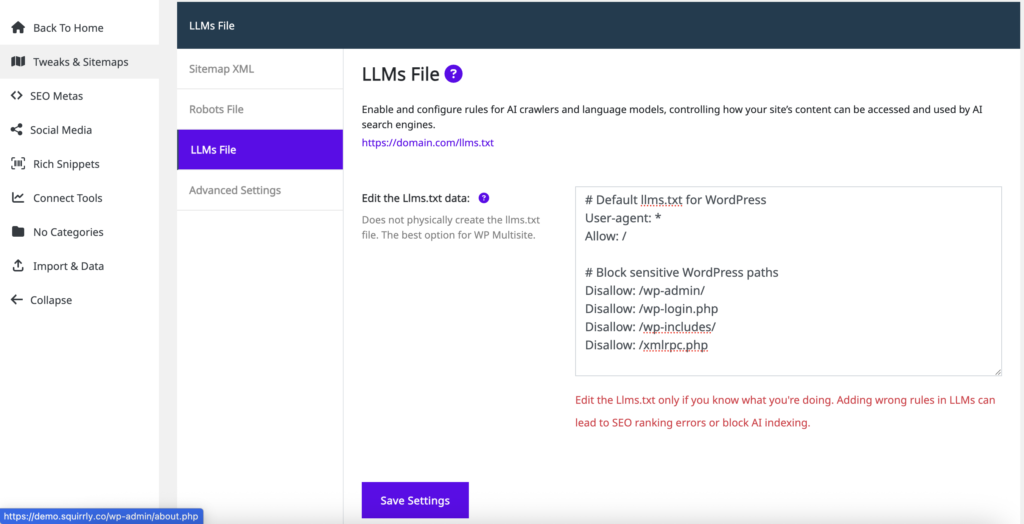

- To reach this section, go to: Squirrly SEO > Technical SEO > Tweaks & Sitemap > LLMs File > Edit the LLMs.txt data

The default code for the LLMs.txt file looks like this:

# Default llms.txt for WordPress

User-agent: *

Allow: /

# Block sensitive WordPress paths

Disallow: /wp-admin/

Disallow: /wp-login.php

Disallow: /wp-includes/

Disallow: /xmlrpc.php

Each line gives instructions:

- User-agent: * → The * means the rules that follow apply to all crawlers.

- Allow: / → Crawlers are allowed to access the whole site.

- Disallow: /wp-admin/ → Block crawlers from the WordPress admin area.

- Disallow: /wp-login.php → Block crawlers from the login page.

- Disallow: /wp-includes/ → Block crawlers from internal WordPress files.

- Disallow: /xmlrpc.php → Block the XML-RPC API (often exploited by bots).

So in short: it lets AI crawlers index your site but keeps them away from sensitive backend paths.

Once enabled, the llms.txt file will be available at:

https://www.your-domain.com/llms.txt

Replace your-domain.com with your actual domain name to view it.

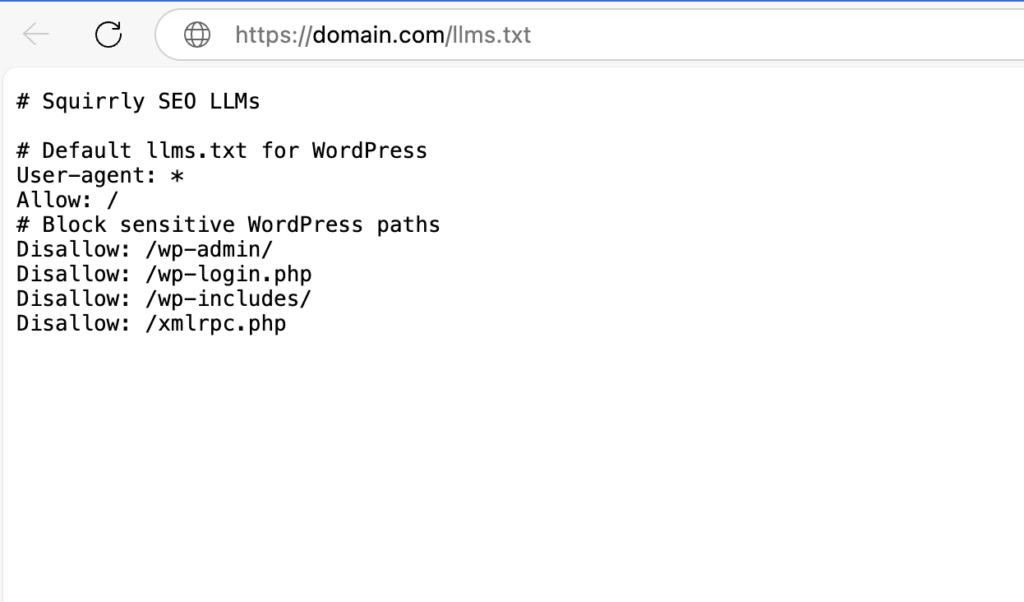

This is an example of how the code appears at your-domain.com/llms.txt.

Custom LLMs.txt Code: Edit data

- To reach this section, go to: Squirrly SEO > Technical SEO > Tweaks & Sitemap > LLMs File > Edit the LLMs.txt data

Just like with Robots.txt, Squirrly also gives you the option to edit and customize your LLMs.txt data. You can add your own rules to:

- Allow or block specific AI crawlers.

- Restrict access to private or premium areas of your site.

- Fine-tune how AI systems interact with your content.
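As a rough sketch of what a rule for one specific AI crawler might look like, here is the default rule set with one extra group added. It assumes crawlers read the file using robots.txt-style conventions, as the default rules suggest. GPTBot is the user-agent name OpenAI publishes for its crawler; it is used here only as an illustration, and whether any given crawler reads and follows llms.txt is up to that crawler.

```text
# Default rules for every other crawler
User-agent: *
Allow: /
Disallow: /wp-admin/
Disallow: /wp-login.php
Disallow: /wp-includes/
Disallow: /xmlrpc.php

# Illustrative assumption: block one named AI crawler from the whole site
User-agent: GPTBot
Disallow: /
```

Under robots.txt conventions, a crawler that finds a group with its own name follows that group instead of the * group, so the last two lines would apply to that crawler alone.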

⚠️ Remember: Only edit the LLMs.txt file if you know exactly what you’re doing. Adding the wrong rules can:

- Block AI crawlers completely.

- Prevent your content from being included in AI-driven search engines.

- Create conflicts with how your site is indexed.
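To make the first risk concrete, here is a hedged sketch of how one short rule can shut out every crawler, again assuming the file is read with robots.txt-style conventions. The /private/ path is a hypothetical placeholder, and the two snippets are alternatives, not one file.

```text
# Risky: a bare slash matches every URL, so this blocks the whole site
User-agent: *
Disallow: /

# Probably intended: block only one section (hypothetical path)
User-agent: *
Disallow: /private/
```

The only difference is the path after Disallow, which is why it is worth re-reading each rule before saving.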

Best Practices for Editing LLMs.txt in Squirrly SEO

Here are some best practices to follow:

1. Start with the default setup

The default LLMs.txt rules provided by Squirrly already block sensitive WordPress paths (like /wp-admin/ and /wp-login.php) while allowing AI crawlers to access your public content. For most websites, this is a safe starting point.

2. Only edit if you understand the impact

One wrong rule can block AI indexing. If you’re not sure, leave the defaults as they are until you’ve learned more.

3. Highlight your most important content

Use the LLMs.txt file to guide AI toward your cornerstone content — things like your About page, main product or service pages, and key blog posts. Think of it as a curated list.

4. Protect private or premium areas

Always disallow AI crawlers from accessing sensitive areas like checkout pages, membership sections, or internal resources. This tells crawlers that honor the file to stay out of private content.
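A minimal sketch of such rules, added below the default ones, could look like this. The paths are assumptions for illustration: /checkout/, /cart/, and /my-account/ are common WooCommerce-style paths, and /members/ is a hypothetical membership area. Replace them with the paths your site actually uses.

```text
User-agent: *
Allow: /
Disallow: /wp-admin/
Disallow: /wp-login.php
Disallow: /wp-includes/
Disallow: /xmlrpc.php

# Assumed private or premium areas; adjust to your own site
Disallow: /checkout/
Disallow: /cart/
Disallow: /my-account/
Disallow: /members/
```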
